The graphics card, or GPU (Graphics Processing Unit), has become an essential component in modern computing, powering everything from video games to artificial intelligence. But this technology didn’t emerge overnight. It has undergone a series of transformative innovations, evolving from simple processors designed to handle basic visuals to the complex and powerful units we see today. This article will take you on a journey through the history of the graphics card, covering key developments, important milestones, and the revolutionary impact it has had on technology and industries worldwide.

The Early Days of Graphics Processing

1. The Beginnings of Computer Graphics (1960s-1970s)

The origins of computer graphics can be traced back to the 1960s when computers were used primarily for scientific calculations. Early graphical output was limited to simple text-based visuals, often printed on paper, and there were no dedicated hardware components for rendering images. These first attempts at generating computer images involved mainframe computers like the IBM 7090, which could display rudimentary line drawings on devices like cathode-ray tube (CRT) screens.

In this era, the term “graphics card” as we know it today didn’t exist. Instead, any kind of image generation or display was handled by central processors, with specialized graphics hardware being virtually non-existent. This limited the complexity of visuals that could be produced.

Interactive graphics nevertheless began to take hold with systems such as Ivan Sutherland’s Sketchpad (1963), which let a user draw directly on a display with a light pen and introduced the concept of graphical interaction between a user and a computer. This set the stage for more focused developments in the field of computer graphics, particularly in gaming and business.

2. The Introduction of Video Display Controllers (Late 1970s – 1980s)

The late 1970s saw the introduction of the first consumer systems with dedicated graphics hardware. The most notable advancement was the Video Display Controller (VDC), a circuit that generates the video signal, relieving the central processing unit (CPU) of much of the work of putting images on screen. Devices like the Atari 2600 (1977) and the Commodore 64 (1982) brought these concepts into mainstream consumer products, particularly in the realm of gaming.

For example, the Atari 2600’s graphics chip, the TIA (Television Interface Adapter), could output basic 2D images and colors, laying the groundwork for more advanced graphical processors. This was an early step toward a clear distinction between CPUs and specialized graphics hardware.

At the same time, early personal computers such as the Apple II and IBM PC were beginning to support more sophisticated visuals through expansion cards. IBM’s Monochrome Display Adapter (MDA), introduced in 1981, was one of the first video cards for the IBM PC, capable of displaying text but no graphics. The Color Graphics Adapter (CGA), introduced the same year, allowed rudimentary color graphics, with four colors on screen at a time in its 320×200 mode.

The Golden Age of 2D Graphics: VGA and SVGA

1. The Arrival of VGA (1987)

The late 1980s marked a major turning point in the development of graphics hardware with the introduction of IBM’s Video Graphics Array (VGA) in 1987. VGA was a significant leap forward, offering a resolution of 640×480 pixels with 16 colors, as well as a 320×200 mode with 256 colors. It quickly became the industry standard and set the stage for future advancements in 2D graphics.
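The pairing of resolution and color count in these modes follows from simple memory arithmetic. The sketch below is illustrative only: the helper name `framebuffer_bytes` is hypothetical, and it assumes the standard 256 KB of VGA video memory and ignores how the hardware actually lays pixels out in memory.

```python
def framebuffer_bytes(width: int, height: int, colors: int) -> int:
    """Bytes needed to hold one frame with `colors` simultaneous colors."""
    # 16 colors need 4 bits per pixel, 256 colors need 8 bits per pixel.
    bits_per_pixel = (colors - 1).bit_length()
    return width * height * bits_per_pixel // 8


VGA_MEMORY_BYTES = 256 * 1024  # assumption: standard 256 KB VGA memory

modes = {
    "640x480, 16 colors": framebuffer_bytes(640, 480, 16),
    "320x200, 256 colors": framebuffer_bytes(320, 200, 256),
    "640x480, 256 colors": framebuffer_bytes(640, 480, 256),
}

for name, size in modes.items():
    verdict = "fits" if size <= VGA_MEMORY_BYTES else "does not fit"
    print(f"{name}: {size} bytes ({verdict} in 256 KB)")
```

Under these assumptions the first two modes fit comfortably, while 640×480 at 256 colors does not, which is one way to see why higher color depths at higher resolutions had to wait for cards with more memory.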

For the first time, personal computers were capable of displaying detailed images and graphics, not just for games but for business and creative applications like desktop publishing. VGA was backward-compatible with earlier graphics standards (CGA and EGA), making it highly versatile and popular across various computing environments.

VGA’s success spurred the development of higher resolutions and color depths, leading to the arrival of Super VGA (SVGA) at the end of the 1980s. SVGA offered higher resolutions (800×600 and beyond) and could display 256 or more colors at those resolutions, vastly improving visual fidelity. This period marked the golden age of 2D graphics, setting the stage for the next revolutionary leap: 3D rendering.

2. The Rise of 3D Graphics in Gaming and Beyond (1990s)

The 1990s saw the dawn of 3D graphics, driven largely by the booming video game industry and the growing demand for more immersive and realistic visual experiences. While 2D graphics had their limits, 3D offered the possibility of fully realized, interactive virtual worlds. However, rendering 3D graphics required much more computational power than simple 2D visuals.

This demand led to the development of the first true consumer 3D graphics accelerators. One of the pioneers in this space was 3dfx Interactive, whose first Voodoo graphics card, released in 1996, revolutionized gaming. The Voodoo 1 was a dedicated 3D add-in card that worked alongside a separate 2D video card, offering real-time 3D acceleration with much higher detail and transforming the way video games looked and felt. Iconic games like Quake and Tomb Raider showcased the potential of 3D graphics, bringing the first-person shooter and adventure genres to life in unprecedented ways.

NVIDIA also entered the scene in the 1990s with the release of its RIVA series of graphics accelerators. NVIDIA’s products were instrumental in bringing 3D acceleration to mainstream PCs, culminating in the release of the NVIDIA GeForce 256 in 1999, which the company marketed as the world’s first “GPU.”

Modern Graphics Cards: The Age of GPUs

1. The Evolution of the GPU (2000s)

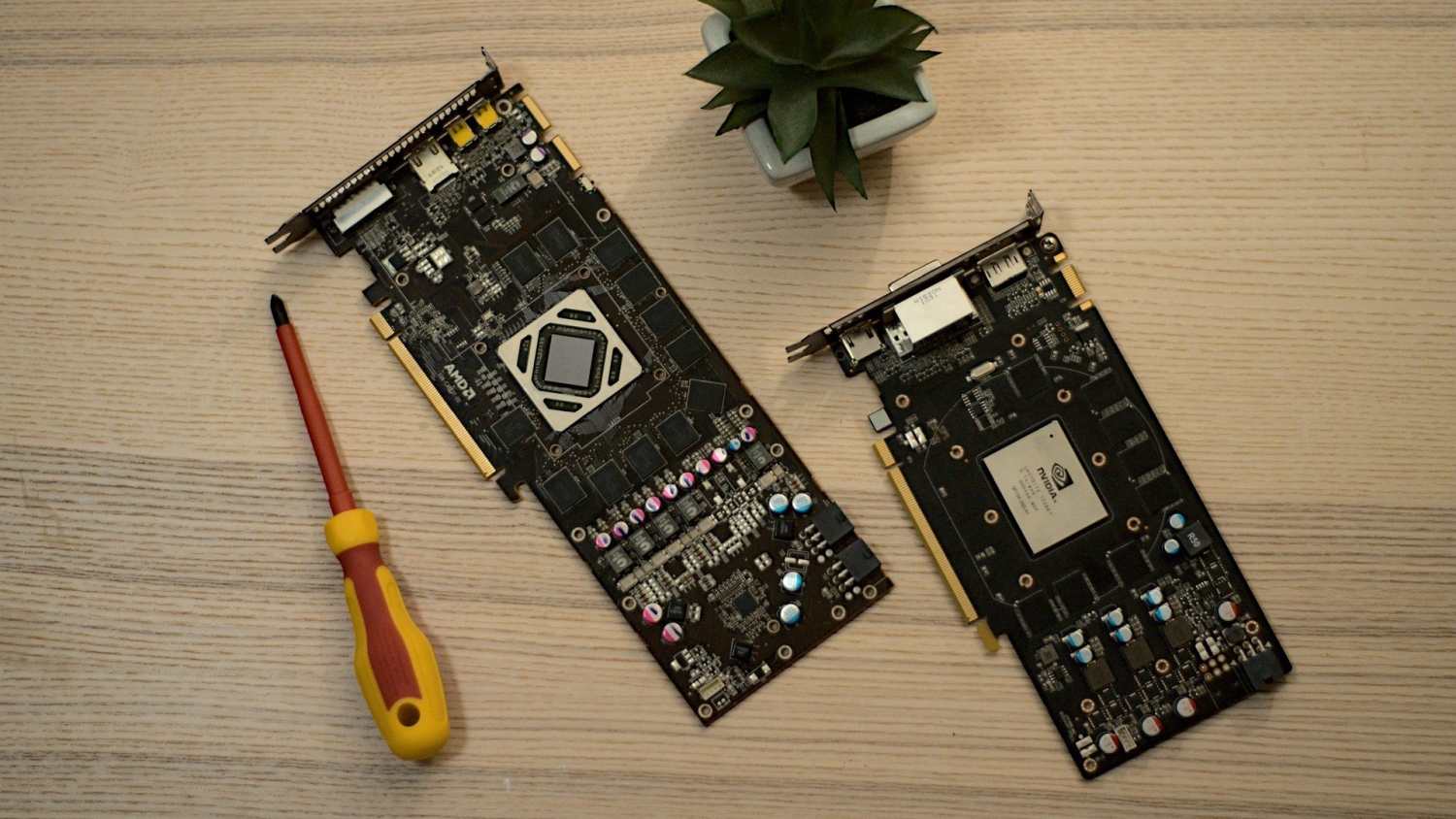

As the 2000s began, the graphics card had firmly established itself as a critical component in the PC ecosystem, not just for gaming but for a wide array of professional and creative applications. NVIDIA and ATI (acquired by AMD in 2006) became the two dominant players in the market, with their GeForce and Radeon series respectively. These GPUs continued to push the envelope in terms of performance, visual quality, and computational power.

The 2000s also saw the introduction of programmable shaders, small programs that run on the GPU for each vertex or pixel and that allowed developers to create more complex lighting, shadows, and visual effects in games and applications. This led to significant improvements in the realism of 3D environments, contributing to the popularity of titles like Half-Life 2 and Crysis, games renowned for their graphical innovations.
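To give a feel for what a shader computes, here is a minimal sketch of one classic lighting calculation, Lambertian diffuse shading, written in plain Python. Real shaders are written in dedicated shading languages and run on the GPU for every pixel; the function names `normalize` and `lambert_shade` are hypothetical and exist only for this illustration.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert_shade(normal, light_dir, base_color):
    """Return the lit color of one surface point.

    Brightness is the cosine of the angle between the surface normal
    and the direction toward the light, clamped at zero so surfaces
    facing away from the light are black.
    """
    n = normalize(normal)
    l = normalize(light_dir)
    intensity = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(channel * intensity for channel in base_color)


# A surface facing the light keeps its full color.
print(lambert_shade((0, 0, 1), (0, 0, 1), (1.0, 0.5, 0.25)))
# A surface facing away from the light receives none.
print(lambert_shade((0, 0, 1), (0, 0, -1), (1.0, 0.5, 0.25)))
```

A GPU applies a calculation of this kind to millions of pixels per frame, which is why moving it into programmable hardware made such a visible difference.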

2. The Rise of GPU Computing

A major turning point in the history of the GPU came with the realization that its parallel processing capabilities could be applied to tasks beyond graphics rendering. In 2006, NVIDIA introduced CUDA (Compute Unified Device Architecture), a platform that allowed developers to use GPUs for general-purpose computing. This transformed the role of the graphics card, turning it into a powerful tool for scientific simulations, artificial intelligence, and data processing.
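The programming model behind this shift can be sketched without a GPU. The example below is a plain-Python emulation, not CUDA code: `saxpy_kernel` and `launch` are hypothetical names, and the loop runs each "thread" one after another where a GPU would run many of them at once.

```python
def saxpy_kernel(i, a, x, y, out):
    """Work done by one 'thread': compute a single output element."""
    out[i] = a * x[i] + y[i]


def launch(kernel, n, *args):
    """Stand-in for a GPU launch: invoke the kernel once per index.

    On a GPU these invocations would execute in parallel; this loop
    runs them sequentially, which gives the same result because each
    invocation touches only its own element.
    """
    for i in range(n):
        kernel(i, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)

launch(saxpy_kernel, len(x), 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0]
```

The key property is that no invocation depends on another, so the work can be spread across thousands of GPU cores. Many scientific and machine learning workloads have this shape.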

GPUs began to find use in a wide range of industries, from medical research and weather forecasting to cryptocurrency mining and machine learning. The rise of AI technologies like deep learning, which requires enormous amounts of computational power, has further cemented the importance of GPUs in modern computing.

The Future of Graphics Cards

As we move through the 2020s, the future of graphics cards is incredibly exciting. Technologies like real-time ray tracing, brought to consumer hardware by NVIDIA’s RTX series in 2018, are pushing the boundaries of realism in gaming and film production. Ray tracing follows the paths of light rays through a scene, allowing for more accurate simulation of light, reflections, and shadows and producing visuals that can approach photorealism.
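At the core of ray tracing is a geometric test: does a given ray hit a given object, and how far away? The following is a minimal sketch of that test for a sphere, in plain Python. The function name `ray_sphere_hit` is hypothetical, and a real renderer would run billions of such tests per second on dedicated hardware, against far more complex geometry.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the ray parameter t of the nearest hit in front of the
    origin, or None if the ray misses.

    Points on the ray are origin + t * direction; t equals distance
    when direction has unit length. Simplification: a ray that starts
    inside the sphere is reported as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    # Coefficients of the quadratic a*t^2 + b*t + c = 0.
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - 4.0 * a * c
    if discriminant < 0:
        return None  # no real solution: the ray misses the sphere
    t = (-b - math.sqrt(discriminant)) / (2.0 * a)
    return t if t > 0 else None


# A ray pointing straight at a unit sphere five units away hits its
# front surface after four units.
print(ray_sphere_hit((0, 0, 0), (0, 0, -1), (0, 0, -5), 1.0))  # 4.0
# A ray pointing sideways misses it.
print(ray_sphere_hit((0, 0, 0), (0, 1, 0), (0, 0, -5), 1.0))   # None
```

Reflections and shadows come from repeating this test: once a hit is found, new rays are traced from the hit point toward light sources and along the mirror direction.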

Additionally, GPUs are expected to continue playing a central role in fields such as artificial intelligence, where their ability to process large datasets quickly is invaluable. We are also seeing advancements in power efficiency and portability, with the rise of powerful GPUs for laptops and mobile devices.

The graphics card has come a long way from its humble beginnings as a simple display adapter. Today, it stands as one of the most important technologies driving modern computing forward. Whether you’re gaming, editing videos, or training an AI model, chances are there’s a GPU at the heart of it all.